Model execution¶

a3main.py¶

The a3main.py file handles the following tasks:

Loading the data from train.json

Splitting the data into training and validation sets (in the ratio specified by trainValSplit)

Data Processing: strings are converted to lower case, and lengths of the reviews are calculated and added to the dataset (this allows for dynamic padding)

Vectorization, using torchtext GloVe vectors 6B.

Batching, using the BucketIterator() provided by torchtext so as to batch together reviews of similar length. This is not necessary for accuracy but will speed up training since the total sequence length can be reduced for some batches.

Model execution: The file student.py is completed to be run in conjunction with a3main.py by using the following command line:

python3 a3main.py

"""

a3main.py

"""

import torch

from torchtext.legacy import data

from config import device

import student

def main():

print("Using device: {}"

"\n".format(str(device)))

# Load the training dataset, and create a dataloader to generate a batch.

textField = data.Field(lower=True, include_lengths=True, batch_first=True,

tokenize=student.tokenise,

preprocessing=student.preprocessing,

postprocessing=student.postprocessing,

stop_words=student.stopWords)

labelField = data.Field(sequential=False, use_vocab=False, is_target=True)

dataset = data.TabularDataset('train.json', 'json',

{'reviewText': ('reviewText', textField),

'rating': ('rating', labelField),

'businessCategory': ('businessCategory', labelField)})

textField.build_vocab(dataset, vectors=student.wordVectors)

# Allow training on the entire dataset, or split it for training and validation.

if student.trainValSplit == 1:

trainLoader = data.BucketIterator(dataset, shuffle=True,

batch_size=student.batchSize,

sort_key=lambda x: len(x.reviewText),

sort_within_batch=True)

else:

train, validate = dataset.split(split_ratio=student.trainValSplit)

trainLoader, valLoader = data.BucketIterator.splits((train, validate),

shuffle=True, batch_size=student.batchSize,

sort_key=lambda x: len(x.reviewText),

sort_within_batch=True)

# Get model and optimiser from student.

net = student.net.to(device)

lossFunc = student.lossFunc

optimiser = student.optimiser

# Train.

for epoch in range(student.epochs):

runningLoss = 0

for i, batch in enumerate(trainLoader):

# Get a batch and potentially send it to GPU memory.

inputs = textField.vocab.vectors[batch.reviewText[0]].to(device)

length = batch.reviewText[1].to(device)

rating = batch.rating.to(device)

businessCategory = batch.businessCategory.to(device)

# PyTorch calculates gradients by accumulating contributions to them

# (useful for RNNs). Hence we must manually set them to zero before

# calculating them.

optimiser.zero_grad()

# Forward pass through the network.

ratingOutput, categoryOutput = net(inputs, length)

loss = lossFunc(ratingOutput, categoryOutput, rating, businessCategory)

# Calculate gradients.

loss.backward()

# Minimise the loss according to the gradient.

optimiser.step()

runningLoss += loss.item()

if i % 32 == 31:

print("Epoch: %2d, Batch: %4d, Loss: %.3f"

% (epoch + 1, i + 1, runningLoss / 32))

runningLoss = 0

# Save model.

torch.save(net.state_dict(), 'savedModel.pth')

print("\n"

"Model saved to savedModel.pth")

# Test on validation data if it exists.

if student.trainValSplit != 1:

net.eval()

correctRatingOnlySum = 0

correctCategoryOnlySum = 0

bothCorrectSum = 0

with torch.no_grad():

for batch in valLoader:

# Get a batch and potentially send it to GPU memory.

inputs = textField.vocab.vectors[batch.reviewText[0]].to(device)

length = batch.reviewText[1].to(device)

rating = batch.rating.to(device)

businessCategory = batch.businessCategory.to(device)

# Convert network output to integer values.

ratingOutputs, categoryOutputs = student.convertNetOutput(*net(inputs, length))

# Calculate performance

correctRating = rating == ratingOutputs.flatten()

correctCategory = businessCategory == categoryOutputs.flatten()

correctRatingOnlySum += torch.sum(correctRating & ~correctCategory).item()

correctCategoryOnlySum += torch.sum(correctCategory & ~correctRating).item()

bothCorrectSum += torch.sum(correctRating & correctCategory).item()

correctRatingOnlyPercent = correctRatingOnlySum / len(validate)

correctCategoryOnlyPercent = correctCategoryOnlySum / len(validate)

bothCorrectPercent = bothCorrectSum / len(validate)

neitherCorrectPer = 1 - correctRatingOnlyPercent \

- correctCategoryOnlyPercent \

- bothCorrectPercent

score = 100 * (bothCorrectPercent

+ 0.5 * correctCategoryOnlyPercent

+ 0.1 * correctRatingOnlyPercent)

print("\n"

"Rating incorrect, business category incorrect: {:.2%}\n"

"Rating correct, business category incorrect: {:.2%}\n"

"Rating incorrect, business category correct: {:.2%}\n"

"Rating correct, business category correct: {:.2%}\n"

"\n"

"Weighted score: {:.2f}".format(neitherCorrectPer,

correctRatingOnlyPercent,

correctCategoryOnlyPercent,

bothCorrectPercent, score))

if __name__ == '__main__':

main()

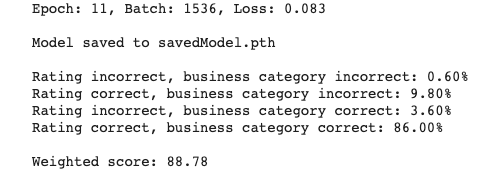

Results¶

Training and fine tuning the hyperparameters were performed by setting trainValSplit to 0.85. This allowed for confidence in the generalisation of our model as we could test the model’s performance on the validation set and adjust hyperparameter values accordingly. To finalise learning on the training dataset, trainValSplit was set to 0.99 to ensure our model is trained on as many data as possible. Increase in training data greatly improves the models overall performance which significantly improved accuracy in classifying business categories.

The final parameters of the Bi-LSTM model were set as follows:

number of expected features in the input (input_size) = 300

number of features in the hidden state (hidden_size) = 200

number of recurrent layers (num_layers) = 2

dropout(p=0.2) on the outputs of each LSTM layer except the last layer

dropout(p=0.3) prior to FC output layer

weight initialisation with kaiming using a normal distribution (mode=’fan_out’, nonlinearity=’relu)

batch size = 32

Adam optimiser with learning rate 0.001 and weight decay 1e-6

training epochs = 11

trainValSplit = 0.99.

The following final results were produced:

Note

Please refer to student.py, a3main.py, and config.py scripts, as well as the train.json training dataset in the GitHub repository for the complete bi-LSTM network and training codes.